Getting started with VCF 4.0 Part 2 – Commission hosts, Create Workload Domain, Deploy NSX-T Edge

Now that a VCF 4.0 Management Domain has been deployed, we can move on to creating our very first VCF 4.0 Virtual Infrastructure Workload Domain (VI WLD). We will require a VI WLD with an NSX-T Edge cluster before we can deploy Kubernetes on vSphere (formerly known as Project Pacific). Not too much has changed in the WLD creation workflow since version 3.9. We still have to commission ESXi hosts before we can create the WLD. What is different from previous versions of VCF is that in VCF 4.0 we can automatically provision NSX-T Edge clusters from SDDC Manager to a WLD.

If you’re not familiar with NSX-T Edge clusters, this is how one of my colleagues succinctly described them (thanks Oliver) – “These automatically deployed NSX-T Edge nodes through VCF are service appliances with pools of capacity to be consumed for centralized network and security services. NSX-T Edge nodes configured as Edge Transport Nodes are added to a NSX-T Edge cluster which are then assigned to logical T0 and/or T1 Gateways, where the centralized network or security services are realized.” Let’s see how to do that in this post.

Disclaimer: “To be clear, this post is based on a pre-GA version of the VMware Cloud Foundation 4.0. While the assumption is that not much should change between the time of writing and when the product becomes generally available, I want readers to be aware that feature behaviour and the user interface could still change before then.”

Commission Hosts

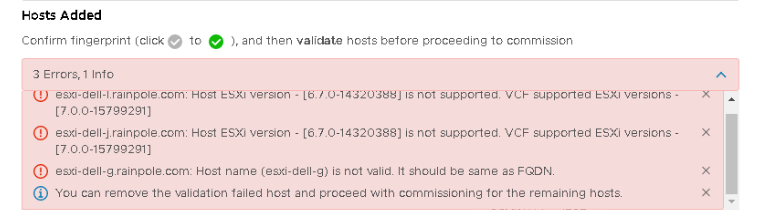

The first step is to commission some ESXi hosts so that they can be used for the VI WLD. I am going to use vSAN as the primary storage in this workload domain so I need to commission a minimum of 3 hosts. For enhanced protection during maintenance and failures, to leverage vSAN self-healing, and to consume additional vSAN features (e.g. erasure coding), you might consider adding more than 3 hosts to the vSAN cluster. The commissioning method hasn’t changed much since my previous post on host commissioning using version 3.9, so I won’t detail all of the steps involved. However, there is a nice new enhancement to call out in version 4.0. In the past, if a validation step failed, you had to check the logs to find the reason. Now the reason for the validation failure is displayed in the UI, which is a great improvement. Just by way of demonstration, I tried to commission hosts that were not running ESXi version 7.0, or did not have their FQDN set correctly. You can see the validation errors clearly displayed in the Commission Hosts UI:

As you can see, the reasons for validation failing are pretty obvious. I addressed the issue highlighted above, and completed the commission process. Now that I have some ESXi 7.0 hosts commissioned, I can continue with building my VI workload domain.
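The two failures demonstrated above (wrong ESXi version, FQDN not set correctly) are easy to catch before opening the commissioning wizard. The following is only a minimal sketch of such a pre-check. The function name, the regular expression, and the example hostnames are my own assumptions for illustration; they are not the checks that SDDC Manager itself runs.

```python
import re
from typing import List

# Hypothetical pre-check, NOT the validation SDDC Manager performs.
# The pattern accepts dot-separated DNS labels ending in an alphabetic
# top-level label, e.g. "esxi-01.example.local".
FQDN_PATTERN = re.compile(
    r"^(?=.{1,253}$)([a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?\.)+[a-z]{2,63}$",
    re.IGNORECASE,
)


def precheck_host(fqdn: str, esxi_version: str) -> List[str]:
    """Return a list of readable problems; an empty list means no issue found."""
    problems = []
    if not FQDN_PATTERN.match(fqdn):
        problems.append(f"{fqdn!r} is not a fully qualified domain name")
    if not esxi_version.startswith("7.0"):
        problems.append(f"ESXi version {esxi_version} is not 7.0")
    return problems


# Example hosts (made-up names and versions).
for name, version in [("esxi-01.example.local", "7.0.0"), ("esxi-02", "6.7.0")]:
    print(name, precheck_host(name, version) or "looks OK")
```

A short hostname such as `esxi-02` fails the FQDN check, and a 6.7 host fails the version check, which mirrors the two error types shown in the Commission Hosts UI.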

Create VI Workload Domain

This process, once again, is very similar to previous workload domain creations, so I am not going to show all of the steps. If you want to see how to create a VI workload domain, step by step, check out my earlier post on how to do so with VCF 3.9. The one big difference, however, is the introduction of NSX-T automation. For WLD networking in VCF 4.0, another set of 3 x NSX-T managers are now deployed in the Management Domain on behalf of this VI Workload Domain. Just as we saw with the Management Domain deployment, the ESXi 7.0 hosts in the workload domain are also automatically added as Transport Nodes during the NSX-T deployment, with both an Overlay Transport Zone and a VLAN Transport Zone created as well. If you log in to NSX-T manager after the WLD deployment, you should see the ESXi hosts successfully configured as transport nodes, as follows:

One other thing that is interesting to note is that the deployment creates anti-affinity VM/Host rules to ensure that the different NSX-T managers are placed on different hosts. This means that a host failure will only impact a single NSX-T manager. This is true for the NSX-T deployments of both the management domain and the workload domains.

Note that NSX-T will use DHCP to assign IP addresses to the host TEPs, so you will need to ensure that there is a DHCP server available to provide IP addresses on the VLAN chosen for the host TEP. If there is no DHCP, the hosts will be configured with IP addresses from the 169.254.0.0/16 network (link-local IPv4 addresses in accordance with RFC 3927).
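A quick way to spot a host TEP that did not get a DHCP lease is to test whether its address falls in the link-local range. This is a minimal sketch using Python's standard `ipaddress` module; the function name and the sample addresses are assumptions for illustration only.

```python
import ipaddress


def tep_has_routable_address(address: str) -> bool:
    """True if the TEP address is NOT an RFC 3927 link-local address."""
    return not ipaddress.ip_address(address).is_link_local


# Sample TEP addresses (made up): the second one suggests DHCP was unavailable.
for tep in ["192.168.80.11", "169.254.10.20"]:
    status = "ok" if tep_has_routable_address(tep) else "link-local, check DHCP on the TEP VLAN"
    print(f"{tep}: {status}")
```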

Now we come to a section that is a major change compared to earlier versions of VCF. Regular readers might be aware that I spent a considerable amount of time documenting how to deploy an NSX-T 2.5 Edge cluster to a WLD in VCF 3.9. I documented how you could do it with BGP, and also with static routes. It was a lot of work so I’m delighted to announce that this procedure has been completely automated in VCF 4.0. Let’s see how.

Deploy NSX-T Edge Cluster to Workload Domain

To begin the task of deploying an NSX-T Edge Cluster to your Workload Domain (WLD), simply select the WLD, and from the Actions drop-down, select ‘Add Edge Cluster’:

Next, you will be presented with the list of prerequisites. Most importantly, the host TEP (tunnel) VLAN which was assigned when the Workload Domain was initially created must be a different VLAN ID to that used for the Edge TEPs (tunnels). This has caught me out before, so be sure to have 2 different VLANs available. Note that these VLANs must be routed to each other. Check all the prerequisite check-boxes to continue.

In the General Cluster Info page, provide information such as MTU, ASN, T0 and T1 Logical Router names as well as passwords for the various Edge components.

In the Use Case section, specify the use case for the Edge. In my case, it is being used for Workload Management (for Kubernetes on vSphere). This automatically sets the Edge Form Factor to Large, and the T0 Service HA setting must be Active-Active. There is no option to change this. I am also going to select Static Routing and not EBGP in this example. It is fantastic that this automated deployment supports both methods.

Note that even though BGP is not selected, it will still be enabled on the T0 Logical Router in the NSX-T configuration. Also note that in this release the static routes are not configured from the wizard. These would need to be added to the T0 Logical Router via the NSX Manager once it has been deployed. A refresher on how to do that is available here. You will most certainly want to add a default static route (0.0.0.0/0) and a SNAT rule to enable your Pods to reach external networks and pull images from Google, Docker Hub or whichever external repository you wish to use.

[Update] I had an interesting discussion about using Active/Active mode with Static Routes. Some feedback I received is that Active/Passive might be a better approach, as this provides an HA Virtual IP which can be used as the gateway when configuring static routes on desktops and workstations. If one of the Edges fails, no additional action would be needed when the passive Edge takes over. With Active/Active, there is no Virtual IP, so any configured routes on desktops/workstations would need to be updated to use the other Edge uplink as the gateway. It’s an interesting observation, and I would be interested in any other takes on this approach – please leave a comment.
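To illustrate why the default static route (0.0.0.0/0) matters for Pods pulling images, here is a small sketch of a longest-prefix-match lookup, which is how a router picks among its static routes. It is a generic illustration in plain Python, not NSX-T code; the prefixes, the next-hop address, and the function name are all made up for the example.

```python
import ipaddress
from typing import Dict, Optional


def next_hop_for(destination: str, routes: Dict[str, str]) -> Optional[str]:
    """Return the next hop of the most specific route matching destination."""
    dest = ipaddress.ip_address(destination)
    best_net, best_hop = None, None
    for prefix, hop in routes.items():
        net = ipaddress.ip_network(prefix)
        # Keep the matching route with the longest prefix seen so far.
        if dest in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_hop = net, hop
    return best_hop


# Hypothetical T0 routing table: one internal prefix plus a default route.
with_default = {"10.244.0.0/16": "internal", "0.0.0.0/0": "192.168.90.1"}
without_default = {"10.244.0.0/16": "internal"}

print(next_hop_for("8.8.8.8", with_default))     # an external address uses the default route
print(next_hop_for("8.8.8.8", without_default))  # no matching route, so nothing to forward to
```

Without the 0.0.0.0/0 entry, an external destination such as an image registry matches no route at all, which is why the default static route has to be added by hand after the wizard completes.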

In Edge Node Details, information about both of the Edge nodes that make up the Edge cluster is provided. We can think of the Edge as using overlays for East-West traffic and uplinks for North-South traffic. The ESXi hosts in my WLD use VLAN 80 for their host overlay (TEP) network. Since I cannot use the same VLAN for the Edge overlay, I am using VLAN 70 instead. Note, as previously mentioned, that there needs to be a route between both of these VLANs or the overlays/tunnels will not form correctly. One other point to make is that the Edge TEPs must be assigned static IP addresses – Edges do not support DHCP allocated IP addresses for the TEPs.
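The Edge TEP requirements described above (a VLAN different from the host TEP VLAN, and statically assigned addresses) can be sanity-checked before filling in the wizard. The sketch below is only an illustration: VLANs 80 and 70 come from my setup, but the subnet, the IP addresses, and the function name are assumptions, and the check does not verify that the two VLANs are actually routed to each other.

```python
import ipaddress
from typing import List


def check_edge_tep_plan(
    host_tep_vlan: int,
    edge_tep_vlan: int,
    edge_tep_cidr: str,
    edge_tep_ips: List[str],
) -> List[str]:
    """Return a list of problems with the planned Edge TEP settings."""
    problems = []
    if host_tep_vlan == edge_tep_vlan:
        problems.append("host TEP and Edge TEP must use different VLAN IDs")
    if not edge_tep_ips:
        problems.append("Edge TEPs need static IP addresses; none were given")
    if len(set(edge_tep_ips)) != len(edge_tep_ips):
        problems.append("Edge TEP IP addresses must be unique")
    network = ipaddress.ip_network(edge_tep_cidr)
    for ip in edge_tep_ips:
        if ipaddress.ip_address(ip) not in network:
            problems.append(f"{ip} is outside the Edge TEP subnet {network}")
    return problems


# Host TEPs on VLAN 80, Edge TEPs on VLAN 70 (subnet and IPs are made up).
print(check_edge_tep_plan(80, 70, "192.168.70.0/24", ["192.168.70.11", "192.168.70.12"]))
```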

Lower down in the window, you need to provide uplink information such as VLAN and IP address. Here is a complete view of the uplink section. When it has been fully populated, click Add Edge Node.

And after completing the process of adding the first Edge node, add the information for the second Edge node. Once both have been added, we can continue.

Review your settings in the Summary page and then proceed to the validation section.

If validation succeeds, we can finish the wizard and begin the NSX-T Edge deployment:

From SDDC Manager, we can monitor the tasks associated with the deployment of an NSX-T Edge Cluster for our Workload Domain.

All going well, you should be able to observe the successful deployment of both NSX-T Edge nodes in NSX-T manager. Of course a number of other items are set up as well such as profiles, network segments, etc.

Excellent. We have a VI WLD set up and we have an NSX-T Edge deployment in the WLD. Everything is now in place to allow us to start on our final goal, the deployment of a vSphere with Kubernetes workload domain. That will be the subject of my next blog post, which will be coming up in a day or so.

To learn more about VMware Cloud Foundation 4, check out the complete VCF 4 Announcement here. To learn more about vSAN 7 features, check out the complete vSAN 7 Announcement here.

Hi Cormac

Greetings from Warsaw. I hope you are doing well.

I have a million questions regarding this presentation – the use case screen alone makes me want to spend like 30 minutes discussing the options 🙂

It would be great if you could write a bit about not only what you chose, but also why you chose those options and not the alternatives.

Is this NSX-T 3? I suppose it is, considering the tags, but I’d like to see your confirmation.

Is this Workload Management mode meant (dedicated) for running Kubernetes on vSphere?

How do you plan to run this Workload Management mode in multi-site environments?

AFAIR the limitation of just one Edge Cluster per TZ and requirement for Active-Active T0 kinda goes against MultiSite requirements for the NSX-T.

Will you run the scenario of connecting this K8S cluster with the environment on the Public Cloud ?

Hey Zibi,

So, to begin with, the main use-case for VCF 4.0 is ‘Project Pacific’ or ‘Kubernetes on vSphere’, or whatever it gets called on launch. That is what I am working towards, and the idea is to show how VCF 4.0 is automating a lot of the required infrastructure deployment.

Regarding NSX-T versions, this has not yet been announced, so I cannot publicly state version numbers.

I went with a static route NSX-T Edge deployment as I do not have access to our upstream router. Therefore I cannot set up BGP peering to my T0. If I did, I would probably pick BGP to make routing seamless. Instead, I now have to add static routes to my Ingress/LBs from my desktops/workstations to make everything work. This is where BGP is so useful.

Regarding Workload Management, at present it is only for ‘Project Pacific’ / ‘Kubernetes on vSphere’ – that is correct.

I don’t know about multi-site support – I guess the question is if we can do something like what we do in PKS with multiple Availability Zones (AZs), and then deploying the K8s cluster across multiple AZs. Let me try to find out what the answer is here.

Regarding the Public Cloud question, I am just trying to show the basics of how to stand up VCF 4.0 at the moment. I’m not sure when I will get to some of the more advanced topics.

Thank you very much for your answers.

Yeah, I guess asking now at this stage of the things for the Public Cloud connectivity is waay too much – it’s just I saw this single T0 Edge Cluster per TZ as the big limitation and I was curious how this will be solved. My use case is bit special and I always need to consider multiple network zones in all my designs.

Would you be so kind as to finish your presentation with a demo of how you spin up a basic app, together with some LCM work on it?

Yep – part 3 got published just yesterday. I’ll be looking at creating some K8s objects shortly.

Does VCF 4 support a collapsed architecture? (single cluster for management and workload domains)

Not yet – it is something that is being scoped out currently, so I am not in a position to give timelines or anything like that at the moment.

[Update – 1 May 2020] To clarify, when you asked about ‘Collapsed architecture’ aka ‘Consolidated architecture’, I assumed that this was in the context of the main use case for VCF 4.0, namely “vSphere with Kubernetes”. At the time of writing, we do not support deploying a “vSphere with Kubernetes” workload on the management domain. It requires its own workload domain. The ability to do just that is what is getting scoped. In fact, at the time of writing, the management domain cannot be used as a workload domain in VCF 4.0.

If the question was simply to do with running VM workloads/application VMs in the management domains, then this is fully supported, to the best of my knowledge.

Hi Shawn, if you have a use-case for a Consolidated VCF 4.0 domain, please let me know if it is ok to reach out directly to speak with you about it.

I have one, please feel free to reach out.

Thanks – will do.