vSphere 5.5 Storage Enhancement Part 7 – LUN ID/RDM Restriction Lifted

About a year ago I wrote an article stating that Raw Device Mappings (RDM) continued to rely on LUN IDs, and that if you wished to successfully vMotion a virtual machine with an RDM from one host to another host, you had to ensure that the LUN was presented in a consistent manner (including identical LUN IDs) to every host that you wished to vMotion to. I recently learnt that this restriction has been lifted in vSphere 5.5. To verify, I did a quick test, presenting the same LUN with a different LUN ID to two different hosts, using that LUN as an RDM, and then seeing if I could successfully vMotion the VM between those hosts. As my previous blog shows, this failed to pass compatibility tests in the past. Here are the results of my new tests with vSphere 5.5.

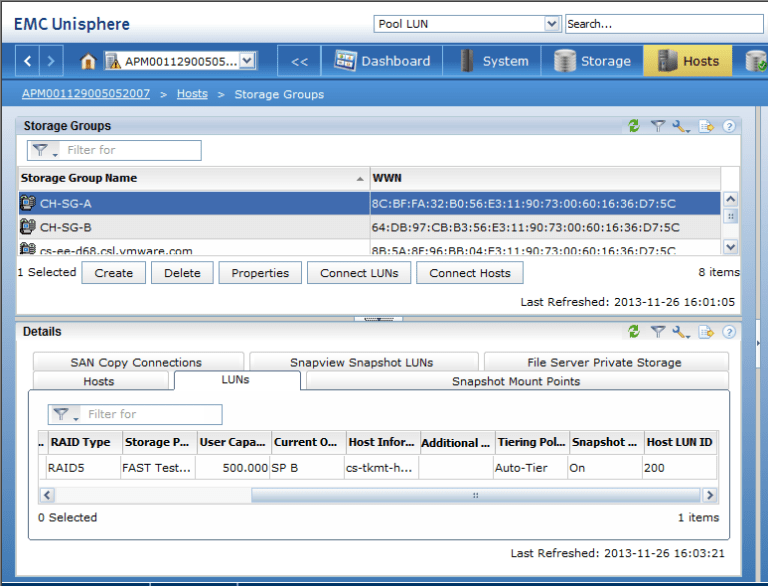

For this test, I used an EMC VNX array which presented LUNs over Fibre Channel to my ESXi hosts. Using EMC’s Unisphere tool, I created two storage groups. The first storage group (CH-SG-A) contained my first host, and the LUN was mapped with a Host LUN ID of 200 – this is the ID under which the ESXi host sees the LUN.

My next storage group (CH-SG-B) contained my other host and the same LUN, but this time the Host LUN ID was set to 201.

Once the LUNs were visible on my ESXi hosts, it was time to map the LUN as an RDM to one of my virtual machines. The VM was initially on my first host, where the LUN ID appeared as 200. I mapped the LUN to my VM:

I proceeded with the vMotion operation. Previous versions of vSphere would have failed this at the compatibility test step, as per my previous blog post. However, this time on vSphere 5.5, the compatibility test succeeded even though the LUN backing the RDM has a different LUN ID at the destination host. I completed the vMotion wizard, selecting the host that also had the LUN mapped (you can only choose an ESXi host that has the LUN mapped) and the operation succeeded. I then examined the virtual machine at the destination, just to ensure that everything had worked as expected, and when I looked at the multipath details of the RDM, I could see that it was successfully using the RDM on LUN ID 201 on the destination ESXi host:

So there you have it. The requirement to map all LUNs with the same ID to facilitate vMotion operations for VMs with RDMs has been lifted. A nice feature in 5.5.

Note: While a best practice would be to present all LUNs with the same LUN ID to all hosts, we have seen issues in the past where this was not possible. The nice thing is that this should no longer be a concern.
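If you still want to follow the best practice and spot LUNs that are presented with different IDs, a small script can help. The following is only a rough sketch, not an official tool: it assumes you have saved the output of `esxcli storage core path list` from each host, and that each path record is separated by a blank line and carries `Device:` and `LUN:` fields. The host names, path names and NAA identifiers in the sample text are made up for illustration.

```python
import re
from collections import defaultdict


def parse_paths(text):
    """Return {device: set of LUN IDs} from path-list style text.

    Assumes blank-line separated records made of 'Key: value' lines.
    """
    luns = defaultdict(set)
    for record in re.split(r"\n\s*\n", text.strip()):
        fields = {}
        for line in record.splitlines():
            key, sep, value = line.strip().partition(": ")
            if sep:
                fields[key] = value.strip()
        if "Device" in fields and "LUN" in fields:
            luns[fields["Device"]].add(int(fields["LUN"]))
    return dict(luns)


def find_mismatches(per_host):
    """Return {device: {host: [lun ids]}} for devices whose LUN IDs differ."""
    by_device = defaultdict(dict)
    for host, devices in per_host.items():
        for device, ids in devices.items():
            by_device[device][host] = sorted(ids)
    return {
        device: hosts
        for device, hosts in by_device.items()
        if len({tuple(ids) for ids in hosts.values()}) > 1
    }


# Hypothetical sample data: one LUN presented as 200 and 201, one as 5 on both.
HOST_A = """\
fc.example-path-1
   Runtime Name: vmhba2:C0:T0:L200
   Device: naa.EXAMPLE-RDM
   LUN: 200

fc.example-path-2
   Runtime Name: vmhba2:C0:T0:L5
   Device: naa.EXAMPLE-VMFS
   LUN: 5
"""

HOST_B = HOST_A.replace("L200", "L201").replace("LUN: 200", "LUN: 201")

mismatches = find_mismatches(
    {"host-a": parse_paths(HOST_A), "host-b": parse_paths(HOST_B)}
)
for device, hosts in mismatches.items():
    print(device, hosts)
```

Only the device whose LUN ID differs between hosts is reported; the one presented consistently is left out. Keying on the device identifier rather than the runtime name is deliberate, since the runtime name itself embeds the LUN ID.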

Hi Cormac

Does this now work with MS Cluster? As it’s a shared LUN, in the past it failed with errors.

I haven’t tested it, but I don’t see why not.

However, if the docs still state to keep them with the same presentation id, follow the docs 🙂

This would drive me crazy. I cannot operate in an atmosphere of inconsistent LUN numbering without a good reason behind it.

Agreed. But not everybody pays as much diligent attention to detail as you do Jas 🙂

Hello Cormac – great post!

I have had VML mismatch issues in the past and actually, currently have an odd one I’m trying to resolve. Unfortunately, my problem isn’t as simple as an inconsistent LUNID.

I’m curious what exactly the change is – whether vMotion of RDMs no longer requires identical VML addresses (a change made long ago for VMFS datastores), or if the VML address no longer uses the LUNID when it’s created. If the VML address is different, and it migrated anyway, that would be a great piece of information for me.

Thanks!

Brent

If I understand correctly, we now mask out the part of the VML that references the LUN ID.

There are many reasons that the VML may change – it could be settings on the port of the array for example.

If you are having issues with the same LUN showing different VMLs on different hosts, I’d file an SR and get some assistance.

Paudie left some very good VML information in the comments of this post – http://cormachogan.com/2012/09/26/do-rdms-still-rely-on-lun-id/

Do the VC and ESXi BOTH need to be on version 5.5? Or just the VC server?

Will it work if the ESXi host is on an earlier version than 5.5 (e.g. ESXi 4.1 or 5.1)?

I’m also curious about this. And another thing – what about an environment with pre-existing RDMs in which vCenter has been upgraded to 5.5? I suspect the only requirement is vCenter 5.5, but it would be great if someone could verify this.